Now we can add a third-what happens on your iPhone no longer stays on your iPhone. Pegasus raised two serious concerns-that Apple’s ecosystem, including iMessage, has dangerous vulnerabilities, and that Apple’s opaque communications and “black box” security made for a very unhealthy mix. No-one saw that coming.Īpple’s timing is dreadful.

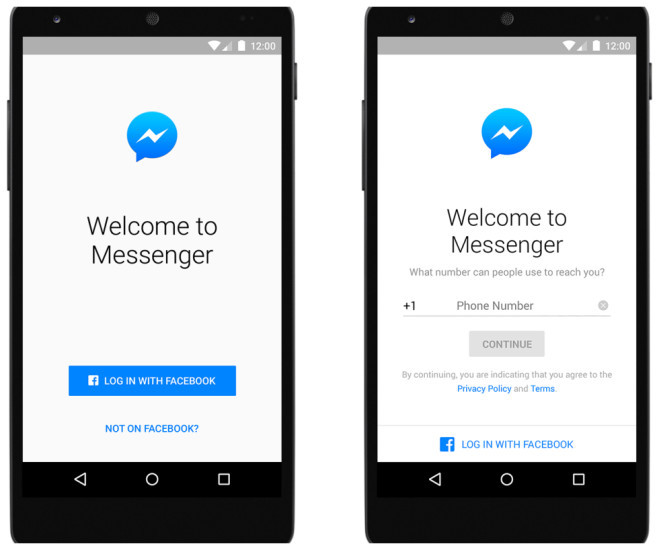

But Apple using technology to snoop on our private, seemingly encrypted iMessages? Apple being the first to introduce client-side content analysis on a flagship, end-to-end encrypted messenger. Fears that WhatsApp might share our data with Facebook were bad, albeit predictable. And the consequences of this first move need to be understood.Īnd so, yet again, we are left with the sinking feeling that nothing is as it should be.

“We want to help protect children from predators who use communication tools to recruit and exploit them,” Apple says, “and limit the spread of Child Sexual Abuse Material.” Apple is also launching an on-device screener for photos that users send to iCloud, hashing images to check them against content flagged by law enforcement. This is much less controversial: online photo services already screen content for CSAM.

“The initial potential concern is that this new technology could drive CSAM further underground,” warns ESET’s Jake Moore, “but at least it is likely to catch those at the early stages of their offending. The secondary concern, however, is that it highlights the power in which Apple holds with the ability to read what is on devices and match any images to those known on a database. This intrusion is growing with intensity and often packaged in a way that is for the greater good.”

Screening iCloud Photos on your iPhone is one thing, but adding client-side screening of any kind to iMessage on your iPhone is quite another. We all want to see technology deployed to tackle abuse, and I have suggested that Facebook reverse plans to encrypt Messenger for this reason, but breaking existing end-to-end encryption is simply that: breaking it.
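To make the iCloud Photos mechanism concrete (hash each image on the device, then check the result against a list of fingerprints of flagged content), here is a minimal Python sketch. It is an illustration under loudly stated assumptions, not Apple’s implementation: the helper names `fingerprint`, `build_database` and `should_flag` are hypothetical, and SHA-256 stands in for the hash. A cryptographic hash like this only matches byte-identical files, whereas Apple’s announced system uses a perceptual hash so that resized or re-encoded copies still match. The sketch also leaves out the cryptographic matching protocol Apple described.

```python
import hashlib


def fingerprint(image_bytes: bytes) -> str:
    """Return a SHA-256 hex digest of the raw image bytes.

    Assumption: SHA-256 is a stand-in for illustration only. It matches
    byte-identical files, not visually similar ones.
    """
    return hashlib.sha256(image_bytes).hexdigest()


def build_database(flagged_images: list[bytes]) -> set[str]:
    """Hypothetical: turn a list of flagged images into a set of fingerprints."""
    return {fingerprint(img) for img in flagged_images}


def should_flag(image_bytes: bytes, database: set[str]) -> bool:
    """Hypothetical on-device check: is this image's fingerprint in the database?

    In the scheme described in the article, a check like this would run on
    the phone before the photo is uploaded to iCloud.
    """
    return fingerprint(image_bytes) in database


if __name__ == "__main__":
    # Made-up placeholder "images"; real inputs would be photo file bytes.
    known = build_database([b"flagged-example-1", b"flagged-example-2"])
    print(should_flag(b"flagged-example-1", known))  # True
    print(should_flag(b"holiday-photo", known))      # False
```

The set lookup is what makes the check cheap enough to run on every photo, and it is also why critics focus on who controls the database: the device flags whatever fingerprints it is given.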
